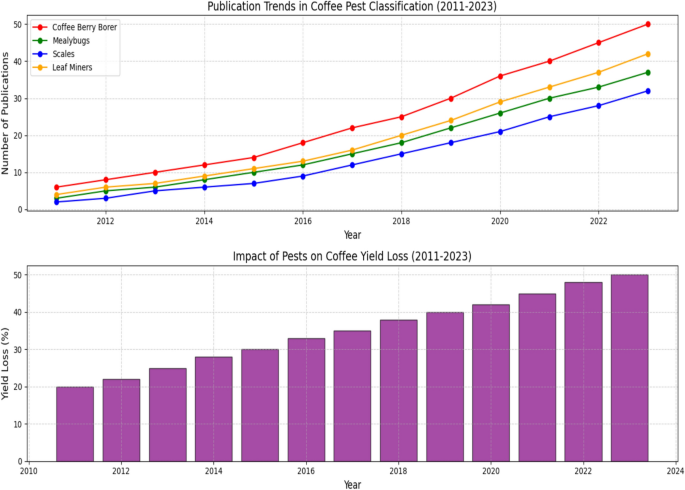

The principal goal of this research is to predict coffee plantation pests and diseases using Vision GNN. Contemporary methods for predicting coffee plant disease, which rely on current and past guidelines, produce imprecise results. The proposed approach addresses these limitations: it predicts the specific disease affecting the plant and provides recommendations for coffee-cultivating farmers.

Data preprocessing and augmentation

Data preprocessing and augmentation techniques are of utmost importance when training a proficient HV-GNN model for the timely identification of coffee crop killers20. Image sizes for forming the graph structure start from 224 × 224. Images of high quality with minimal compression artifacts are preferred. The sizes of the collected coffee plant images may vary, but for optimal processing the model needs images of equal size. Scaling and cropping guarantee that all images match the model's input dimensions. Pixel values typically change with illumination, so the image data are normalized. Normalization techniques such as subtracting the mean or scaling pixel values to a range (0–1) focus the model on meaningful variations in image content rather than on absolute intensity levels. Camera sensors and dust particles may introduce noise into images. This noise is reduced with the mean filter in Eq. 1, where (i, j) represents pixel coordinates within the K × K filter kernel.

$$Y = \frac{\sum_{(i,j)} X(i, j)}{K^{2}}$$

(1)
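The normalization and mean-filtering steps described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the function names `normalize_01` and `mean_filter`, the 8-bit input assumption, the odd kernel size, and the edge-replication border handling are all assumptions that the text does not specify.

```python
import numpy as np


def normalize_01(image: np.ndarray) -> np.ndarray:
    """Scale 8-bit pixel values to the range [0, 1] (assumes uint8 input)."""
    return image.astype(np.float64) / 255.0


def mean_filter(image: np.ndarray, k: int = 3) -> np.ndarray:
    """Apply a K x K mean filter (Eq. 1) to a 2-D grayscale image.

    Assumes k is odd. Borders are handled by replicating edge pixels,
    which is an assumption made only for this sketch.
    """
    pad = k // 2
    padded = np.pad(image.astype(np.float64), pad, mode="edge")
    h, w = image.shape
    out = np.zeros((h, w), dtype=np.float64)
    # Sum the k*k shifted copies of the image, then divide by K^2.
    for di in range(k):
        for dj in range(k):
            out += padded[di:di + h, dj:dj + w]
    return out / (k * k)
```

Each output pixel is the average of the K × K window centered on it, so isolated noisy pixels are spread out and attenuated by a factor of K².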

Filtering can improve the quality of the model's input data and reduce noise. Rotation and flipping: images may be rotated or flipped horizontally or vertically to simulate views of coffee plants from various angles. This makes the model more robust to changes in image orientation. Color jitter (Eq. 2): adjusting the color balance (brightness, contrast, saturation) can generate "virtual" images that mimic natural lighting conditions. Color jittering involves randomly adjusting image properties such as brightness (δB), contrast (δC), saturation (δS), and hue (δH) within a predefined range.

$$\text{New}_{\text{Brightness}} = \text{Original}_{\text{Brightness}} + \delta B$$

(2)

With these augmentations, the model can generalize better to new data. Random cropping from the original images lets the algorithm learn from different parts of the plant, which may improve the detection of localized damage.
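The augmentation steps above (flipping, rotation, brightness jitter as in Eq. 2, and random cropping) might be combined as in the sketch below. The function name `augment`, the jitter range `max_delta_b`, the crop size, and the restriction to 90-degree rotations are illustrative assumptions; the text does not give these values.

```python
import numpy as np


def augment(image, rng, max_delta_b=0.2, crop_size=(200, 200)):
    """Randomly augment an H x W x 3 image with float values in [0, 1].

    All parameter values here are hypothetical defaults for illustration.
    """
    out = image
    # Random horizontal and vertical flips.
    if rng.random() < 0.5:
        out = np.fliplr(out)
    if rng.random() < 0.5:
        out = np.flipud(out)
    # Random rotation by a multiple of 90 degrees (acts on the first two axes).
    out = np.rot90(out, k=int(rng.integers(0, 4)))
    # Brightness jitter (Eq. 2): add a random offset delta_B, then clip.
    delta_b = rng.uniform(-max_delta_b, max_delta_b)
    out = np.clip(out + delta_b, 0.0, 1.0)
    # Random crop of the requested size.
    ch, cw = crop_size
    h, w = out.shape[:2]
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    return out[top:top + ch, left:left + cw]
```

Passing a seeded generator such as `np.random.default_rng(0)` makes the augmentation reproducible. Contrast, saturation, and hue jitter would follow the same pattern but are omitted here for brevity.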

Feature extraction using Convolutional Neural Network (CNN)

Convolutional Neural Networks (CNNs) are highly effective for image feature extraction. In the convolutional layers, the input image is passed through learnable filters, also known as kernels. The filters slide across the image, analyzing one local region at a time. Each filter is designed to detect a distinct pattern or characteristic, such as edges, lines, shapes, or textures. A convolutional layer produces a feature map as its output.

$$\text{Activation Map}(i, j) = \sum_{k,l} W(k, l) \cdot \text{Input}(i + k, j + l) + b$$

(3)

where i, j indicate the position within the feature map (the output of the convolutional layer); k, l indicate the position within the filter (kernel) of the convolutional layer in Eq. 3; W(k, l) is the weight value at position (k, l) in the filter; Input(i + k, j + l) is the pixel value at position (i + k, j + l) in the input image; b is the bias term for the specific filter; and Σ denotes summation over all elements within the filter. The feature map indicates where the patterns detected by a filter occur across the whole image. Multiple filters are used within each layer, producing multiple feature maps, each of which emphasizes certain aspects of the image. CNNs typically stack many convolutional layers in sequence. As one progresses through the layers, the complexity of the learned features increases. Lower layers learn elementary features such as edges and textures. Eq. 4 describes how the CNN features are subsequently updated for each node of the GNN.

$$\text{New Feature}(i) = f\left(\text{Current Feature}(i), \sum \text{Aggregated Features}(\text{neighbors of } i)\right)$$

(4)

where i represents the specific image node (coffee plant) in the GNN; f is a non-linear function that updates the feature based on the current features and the aggregated features from neighbors, and can involve learnable parameters; Current Feature(i) represents the features extracted by the CNN for the i-th image (coffee plant); and Σ Aggregated Features(neighbors of i) represents the combined features from neighboring image nodes after an aggregation function (e.g., averaging, learnable weights). Higher layers combine these features to identify intricate patterns and objects. CNNs frequently include pooling layers to reduce dimensionality. Max and average pooling summarize local feature map information. This reduces the number of network parameters and controls overfitting21.
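To make the convolution in Eq. 3 and the pooling step concrete, the following NumPy sketch computes a single-channel activation map and then applies max pooling. It is a teaching sketch under stated assumptions, not the network used in the paper: the function names, the "valid" (no padding) boundary handling, the stride of 1, and the bias being added once per output position are all assumptions.

```python
import numpy as np


def conv2d_valid(inp: np.ndarray, weights: np.ndarray, bias: float = 0.0) -> np.ndarray:
    """Activation map of Eq. 3 for one filter, without padding (stride 1).

    out[i, j] = sum over (k, l) of W(k, l) * Input(i + k, j + l), plus b.
    """
    kh, kw = weights.shape
    oh = inp.shape[0] - kh + 1
    ow = inp.shape[1] - kw + 1
    out = np.empty((oh, ow), dtype=np.float64)
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(weights * inp[i:i + kh, j:j + kw]) + bias
    return out


def max_pool2d(fmap: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; trailing rows/columns that do not fill a window are dropped."""
    h = fmap.shape[0] // size * size
    w = fmap.shape[1] // size * size
    blocks = fmap[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))
```

Pooling with a window of size 2 halves each spatial dimension, which is how the parameter count of later layers is kept in check.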

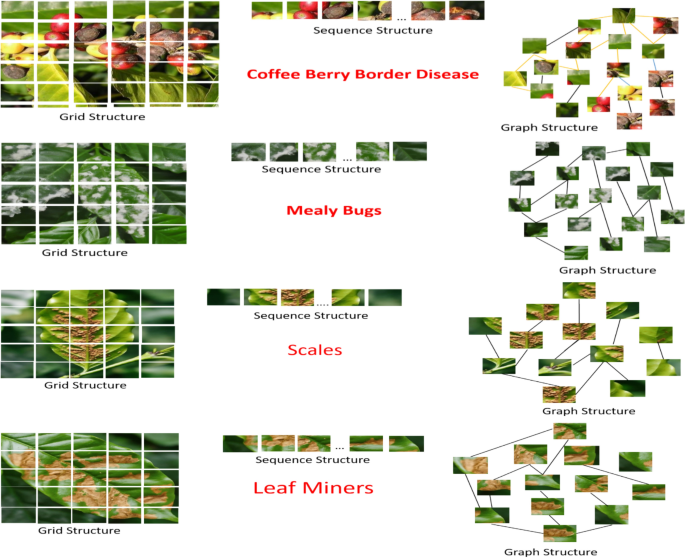

Graph construction

Recent vision transformers divide images into patches. For example, ViT splits a 224 × 224 image into patches of 16 × 16 pixels, producing a sequence of 196 patches (a 14 × 14 grid), as shown in Fig. 2. In a grid structure, the image is divided into a regular grid of pixels, each holding a color intensity. This basic and efficient grid format fails to capture interactions between non-neighboring pixels. Other approaches represent images as sequences of smaller patches, which are extracted from the image and processed sequentially. In the proposed graph structure, nodes can be individual pixels, patches extracted from the image, or even entire regions of interest. Edges represent the connections (relationships) between nodes. These edges can be based on spatial proximity (neighboring pixels or patches) or on other factors such as semantic relationships and similarity in color or texture.
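One way to build such a graph is sketched below: the image is cut into 16 × 16 patches that become nodes, and each node is connected to its nearest neighbors in feature space. This is a minimal sketch under assumptions that the text does not state: raw flattened pixels stand in for the CNN features, edges are chosen by Euclidean k-nearest neighbors with `k=8`, and the names `image_to_patches` and `knn_edges` are hypothetical.

```python
import numpy as np


def image_to_patches(image: np.ndarray, patch: int = 16) -> np.ndarray:
    """Split an H x W x C image into non-overlapping patches, one flattened vector per node."""
    h, w, c = image.shape
    gh, gw = h // patch, w // patch
    x = image[:gh * patch, :gw * patch].reshape(gh, patch, gw, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (grid row, grid col, patch row, patch col, channel)
    return x.reshape(gh * gw, patch * patch * c)


def knn_edges(features: np.ndarray, k: int = 8) -> np.ndarray:
    """Return an (N * k, 2) array of (node, neighbor) pairs.

    Each node is linked to its k nearest neighbors by Euclidean distance
    in feature space; self-loops are excluded. Requires k < N.
    """
    sq = np.sum(features ** 2, axis=1)
    dist = sq[:, None] + sq[None, :] - 2.0 * features @ features.T
    np.fill_diagonal(dist, np.inf)  # a node is never its own neighbor
    nbrs = np.argsort(dist, axis=1)[:, :k]
    src = np.repeat(np.arange(features.shape[0]), k)
    return np.stack([src, nbrs.ravel()], axis=1)
```

For a 224 × 224 RGB image this yields 196 nodes with 768-dimensional features. Unlike a fixed grid, the similarity-based edges can link patches that are far apart in the image but similar in color or texture.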

Graph processing and feature transform with GNN

The Graph Neural Network (GNN) iteratively updates the features of each node through multiple rounds of message passing. During each round, a node's neighbors send it messages that are based on the features of the neighboring node and the weights of the edges that connect them. These messages gather information about the local environment. Edges between nodes represent their relationship. GNNs can learn edge weights during training, so the model automatically determines which node connections are most informative for disease prediction. Domain knowledge can also be used to set edge weights, for example by connecting neighboring pixels with a higher weight than distant regions. The message that node i receives from its neighbor j is given by Eq. 5.

$$\text{Message}(i, j) = f\left(\text{Feature}(j), \text{Edge Weight}(i, j)\right)$$

(5)

where f is a learnable function that determines how the neighbor's feature (information) is transformed based on the edge weight; Feature(j) is the feature vector of the neighboring node j; and Edge Weight(i, j) is the weight associated with the edge connecting node i to its neighbor j. After receiving messages from all neighbors, node i updates its feature vector. This update considers both the original feature and the aggregated information from the messages: the feature transformation combines the node's original CNN-extracted features with the aggregated messages from its neighbors. This allows the GNN to consider the context of a particular region (e.g., a leaf) in relation to its surrounding regions (stems, other leaves). GNNs are highly flexible and can adapt to data with varying sizes, structures, and relationships, making them particularly suitable for the heterogeneous and dynamic conditions seen in agricultural settings22.
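A single round of the message passing described above could look like the following NumPy sketch. The specific choices are assumptions made for illustration: the message function f simply scales the neighbor's feature by the edge weight, messages are mean-aggregated, and the update is a ReLU over two linear maps (`w_self`, `w_nbr`). The paper does not specify these forms.

```python
import numpy as np


def message_passing_step(features, edges, edge_weights, w_self, w_nbr):
    """One message-passing round on a graph.

    features:     (N, D) float array of node features.
    edges:        (E, 2) int array of (i, j) pairs; neighbor j sends a message to node i.
    edge_weights: (E,) float array, one weight per edge.
    w_self, w_nbr: (D, D_out) matrices (hypothetical learnable parameters).
    """
    n = features.shape[0]
    dst, src = edges[:, 0], edges[:, 1]
    # Message(i, j): the neighbor's feature scaled by the edge weight (assumed form of f).
    messages = edge_weights[:, None] * features[src]
    # Sum the messages arriving at each node, then divide by the number of neighbors.
    agg = np.zeros_like(features)
    np.add.at(agg, dst, messages)
    deg = np.bincount(dst, minlength=n).astype(features.dtype)
    agg /= np.maximum(deg, 1.0)[:, None]
    # Update: combine the node's own feature with the aggregated messages, then ReLU.
    return np.maximum(features @ w_self + agg @ w_nbr, 0.0)
```

Repeating this step lets information travel further across the graph: after n rounds, each node's feature reflects nodes up to n edges away.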

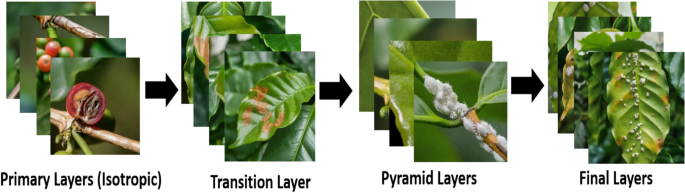

Multi-Layer hybrid network architecture

A multi-layer HV-GNN architecture predicts coffee plant diseases using isotropic and pyramid approaches. The network consists of several GNN layers, with each layer potentially having a different architecture. An isotropic architecture is used in the early layers: all network nodes use the same message-passing mechanism to learn broad relationships and the local features near them. This captures typical disease indicators such as small lesions or textural changes, as illustrated in Fig. 3.

A transition layer sits between the isotropic and pyramid layers and is described by Eq. 6, where m_i^{(l)} is the message received by node i in layer l; AGGREGATE is a function that combines the features of neighboring nodes j in the previous layer (l - 1), which can be a summation, a mean, or a more complex learnable function; N(i) is the set of neighbors of node i; and f_j^{(l-1)} is the feature vector of node j in layer l - 1.

$$m_i^{(l)} = \mathrm{AGGREGATE}\left(\left\{ f_j^{(l-1)} \mid j \in N(i) \right\}\right)$$

(6)

The pyramid layers use node coarsening to group several nodes from the previous layer into one. This reduces the graph resolution but preserves key information. To prepare for pyramid layer processing, the features of the clustered nodes are merged. The subsequent layers are pyramidal. Lower pyramid layers aggregate features from a smaller neighborhood around each node. This allows precise examination within a region, which may reveal subtle disease indicators.

$$m_i^{(l)} = \mathrm{AGGREGATE}\left(\left\{ f_j^{(l-1)} \mid j \in N_k(i) \right\}\right) \cdot W_k^{(l)}$$

(7)

In Eq. 7, N_k(i) is the set of neighbors of node i within its specific receptive field size k in layer l. This receptive field size can increase as we move up the pyramid. W_k^{(l)} is a learnable weight vector specific to receptive field size k in layer l. This allows the network to prioritize information based on scale. Higher pyramid layers can absorb more context-relevant information because of their wider receptive fields, which is required to capture larger-scale disease characteristics or overall leaf health. The message-passing functions of these layers may be customized to different receptive fields with scale-aware methods. Depending on the needs of coffee plant disease prediction, the final isotropic levels may include one pyramid layer. These layers refine node representations using local and global information23.
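The pyramid stage can be illustrated with the sketch below, which covers node coarsening on a patch grid and the scale-aware aggregation of Eq. 7. Several details are assumptions made only for this example: coarsening averages fixed 2 × 2 blocks of grid nodes, N_k(i) is taken to be all nodes within k hops of node i, AGGREGATE is a mean, and W_k is applied as an element-wise product. The function names are hypothetical.

```python
import numpy as np


def coarsen_grid(features, grid_h, grid_w, factor=2):
    """Node coarsening: merge each factor x factor block of grid nodes into one node (mean)."""
    d = features.shape[1]
    x = features.reshape(grid_h, grid_w, d)
    gh, gw = grid_h // factor, grid_w // factor
    x = x[:gh * factor, :gw * factor].reshape(gh, factor, gw, factor, d)
    return x.mean(axis=(1, 3)).reshape(gh * gw, d)


def k_hop_neighbors(adj, k):
    """Boolean (N, N) matrix: entry (i, j) is True if j is within k hops of i, excluding i itself."""
    n = adj.shape[0]
    step = adj.astype(int)
    reach = np.eye(n, dtype=bool)
    for _ in range(k):
        # Extend the reachable set by one more hop.
        reach = reach | ((reach.astype(int) @ step) > 0)
    np.fill_diagonal(reach, False)
    return reach


def pyramid_message(features, adj, k, w_k):
    """Eq. 7 sketch: mean of features over N_k(i), scaled element-wise by the weight vector w_k."""
    nk = k_hop_neighbors(adj, k).astype(features.dtype)
    count = np.maximum(nk.sum(axis=1, keepdims=True), 1.0)
    return (nk @ features) / count * w_k
```

Increasing `k` in higher pyramid layers widens the receptive field, while a separate `w_k` per scale lets each layer weight its feature dimensions differently.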