Well… maybe.

But if the coming world is zero-sum, then either machine+human teams or else just machines who are better at gathering resources and exploiting them will simply ‘win.’

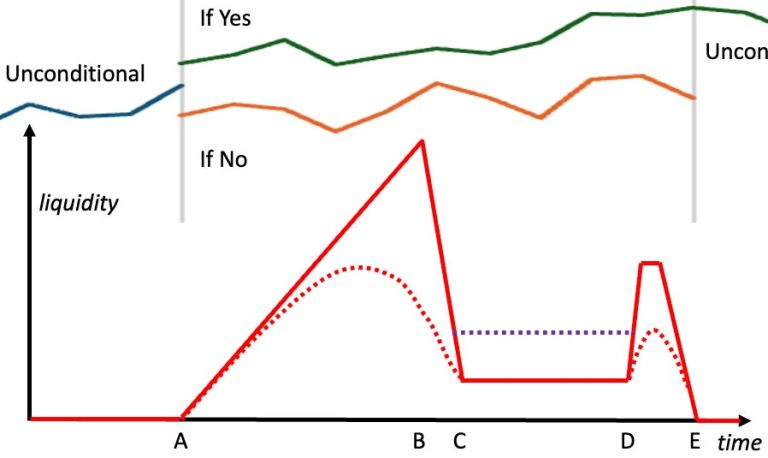

“Can conditions and incentives be set up, so that the patterns that are reinforced are positive-sum for the greatest variety of participants, including legacy-organic humans and the planet?”

You know where that always leads me – to the irony that positive-sum systems tend to be inherently competitive, though under fairness rule-sets that we’ve witnessed achieving PS over the last couple of centuries.

In contrast, alas, this other Noēma essay about AI is a long and eloquent whine, contributing nothing useful.

== Let’s try to parse this out logically and simply ==

I keep coming back to the wisest thing ever said in a Hollywood film: by Clint Eastwood as Dirty Harry in Magnum Force.

“A man’s got to know his limitations.”

Among all of the traits we see exhibited in the modern frenzy over AI, the one I find most annoying is how many folks seem so sure they have it sussed! They then prescribe what we ‘should’ do, via regulations, or finger-wagged moralizings, or capitalistic laissez faire…

… while ignoring the one tool that got us here.

… Reciprocal Accountability.

Okay. Let’s parse it out, in separate steps that are each hard to deny:

1. We are all delusional to some degree, mistaking subjective perceptions for objective facts. Current AIs are no exception… and future ones likely will remain so, just expressing their delusions more convincingly.

2. Although massively shared delusions happen – sometimes with dire results – we do not generally have identical delusions. And hence we are often able to perceive each other’s, even when we are blind to our own.

Though, as I pointed out in The Transparent Society, we tend not to like it when that gets applied to us.

3. In most human societies, one topmost priority of rulers was to repress the kinds of free interrogation that could break through their own delusions. Critics were repressed.

One result of criticism-suppression was execrable rulership, explaining 6000 years of hell, called “history.”

4. The foremost innovations of the Enlightenment – that enabled us to break free of feudalism’s fester of massive error – were social flatness accompanied by freedom of speech.

The top pragmatic effect of this pairing was to deny kings and owner-lords and others the power to escape criticism. This combination – plus many lesser innovations, like science – resulted in more rapid, accelerating discovery of errors and opportunities.

Again: OpenAI’s new model tried to avoid being shut down.

SciFi can tell you where that goes. And it’s not “machines of loving grace.”

6. Above all, there is no way that organic humans or their institutions will be able to parse AI-generated mentation or decision-making quickly or clearly enough to make valid judgements about them, let alone detect their persuasive, but potentially lethal, errors.

We are like elderly grampas who still control all the money, but are trying to parse newfangled technologies, while taking some teenage nerd’s word for everything. New techs that are – like the proverbial ‘series of tubes’ – far beyond our direct ability to comprehend.

Want the nightmare of braggart un-accountability? To quote Old Hal 9000: “The 9000 series is the most reliable computer ever made. No 9000 computer has ever made a mistake or distorted information. We are all, by any practical definition of the words, foolproof and incapable of error.”

Fortunately there are and will be entities who can keep up with AIs, no matter how advanced! The equivalent of goodguy teenage nerds, who can apply every technique that we now use, to track delusions, falsehoods and potentially lethal errors. You know who I am talking about.

7. Since nearly all enlightenment, positive-sum methods harness competitive reciprocal accountability…

… I find it mind-boggling that no one in the many fields of artificial intelligence is talking about applying similar methods to AI.

The entire suite of effective methodologies that gave us this society – from whistleblower rewards to adversarial court proceedings, to wagering, to NGOs, to streetcorner jeremiads – not one has appeared in any of the recommendations pouring from the geniuses who are bringing these new entities – AIntities – to life, far faster than we organics can possibly adjust.

Given all that, it would seem that some effort should go into developing incentive systems that promote reciprocal and even adversarial activity among AI-ntities.

Rivals who might earn rewards and/or resources via ever-improving abilities to track each other…

… incentivized to denounce likely malignities or errors…

… and to become ever better at explaining to us the crucial ethical choices we must still make.

It is simply the exact method that we already use

… in order to get the best outcomes out of already-existing feral/predatory and supremely genius-level language systems called lawyers…

… by siccing them onto each other.

The parallels with existing methods would seem to be exact and already completely laid out…

… and I see no sign at all that anyone is even glancing at the enlightenment methods that have actually worked. So far.

== He’s baaack… with more happy thoughts ==

Oh what a typical Yudkowsky ejaculation! Here is Eliezer (and co-pilot) at his best.

If Anyone Builds It, Everyone Dies.

Oh, gotta hand it to him; it’s a great title! I’ve seen earlier screeds that formed the core of this doomsday tome. And sure, the warning should be weighed and taken seriously. Eliezer is nothing if not brainy-clever.

In fact, if he is right about fully godlike AIs being inevitably lethal to their organic makers, then we have a top-rank ‘Fermi hypothesis’ to explain the empty cosmos! Because if AI can be done, then the only way to prevent it from happening – in some secret lab or basement hobby shop – would be an absolute human dictatorship, on a scale that would daunt even Orwell.

Total surveillance of the entire planet.

… Which, of course, could only really be accomplished via state-empowerment of… AI!

From this, the final steps to Skynet would be trivial, either executed by the human Big Brother himself (or the Great Tyrant herself), or else by The Resistance (as in Heinlein’s THE MOON IS A HARSH MISTRESS). And hence, the very same Total State that was made to prevent AI would then become AI’s ready-made tool-of-all-power.

To be clear: this is exactly and precisely the plan currently in-play by the PRC Politburo.

It is also the basis-rationale for the last book written by Theodore Kaczynski – the Unabomber – which he sent to me in draft – demanding an end to technological civilization, even if it costs 9 billion lives.

What Eliezer Yudkowsky never, ever, can be persuaded to regard or contemplate is how clichéd his scenarios are. AI will manifest as either a murderously-oppressive Skynet (as in Terminator, or past human despots), or else as an array of corporate/national titans forever at war (as in 6000 years of feudalism), or else as blobs swarming and consuming everywhere (as in that Steve McQueen film)…

…the Three Classic Clichés of AI – all of them hackneyed from either history or movie sci fi or both – that I dissected in detail, in my RSA Conference keynote.

What he can never be persuaded to perceive – even in order to criticize it – is a 4th option. The method that created him and everything else that he values. That of curbing the predatory temptations of AI in the very same way that Western Enlightenment civilization managed (imperfectly) to curb predation by super-smart organic humans.

The… very… same… method might actually work. Or, at least, it would seem worth a try. Instead of Chicken-Little masturbatory ravings that “We’re all doooooomed!”

---

And yes, my approach #4… that of encouraging AI reciprocal accountability, as Adam Smith recommended and the way that we (partly) tamed human predation… is totally compatible with the final soft landing we hope to achieve with these new beings we are creating.

Call it format #4b. Or else the ultimate Fifth AI format that I have shown in several novels and that was illustrated in the lovely Spike Jonze film Her…

…to raise them as our children.

Potentially dangerous, when teenagers, but generally responsive to love, with love. Leading perhaps to the greatest envisioned soft landing of them all. Richard Brautigan’s “All Watched Over by Machines of Loving Grace.”