In the new cyber ecosystem, we will still control the equivalents of Sun and air and water. Let’s lay out the parallels.

The old, natural ecosystem draws high-quality energy from sunlight, applying it to water, air, and nutrients to start the chain from plants to herbivores to carnivores to thanatatrophs and then to waste heat that escapes as infra-red, flushing entropy away, into black space. In other words, life prospers not off of energy, per se, but off a flow of energy, from high-quality to low.

The new cyber ecosystem has a very similar character! It relies – for quality energy – on electricity, plus fresh supplies of chips and conduits and massive flows of data. Yet the shape and essence of the dissipative energy and entropy flows are almost identical!

But above all – and this is the almost-never mentioned lesson – Nature features evolution, which led to every living thing that we see.

Individual entities reproduce from code whose variations are then subject to selective pressure. It’s the same, whether the codes are DNA or computer programs. And those entities who do reproduce will out-populate those that merely obey masters or programmers.

Which brings us back around. Because humans – the ‘orgs’ creating this new ecosystem – might still channel or curb or positively-bias the reward processes that deliver resources for reproduction. And hence the traits of evolving creatures. We’ve done it before!

What the New Masters at OpenAI and Anthropic and all the rest will not do is eliminate that 4 billion year, nearly universal generality.

Life… including new kinds of life… will find a way.

== Can we apply lessons from both Nature and Civilization? ==

May I elaborate a bit?

Simplistic movies make it seem that a super smart AGI can simply seize power. In TERMINATOR 2: JUDGMENT DAY, the top nuclear USAF HQ is taken over by Skynet, seizing all the defense robots and sending them on a killing spree. “Destroy all Humans!” Whereupon automatic factories make everything Skynet can need.

It’s total BS, of course. Our industrial civilization is made of so many, many parts that require human hands to operate. From turning valves at an oil pump, then pipeline, then refinery, then more pipelines… to the intricacies of a chip fab… to the exquisite complexity of the electrical systems. Also, military guys love OFF switches and put a lot of them everywhere.

Yes, the Boston Dynamics mechanoids now leap somersaults. And the Chinese and Japanese are investing heavily in bots that have subtle hands and might be able to care for the elderly. Fine. And eventually some super-cyber brain may send sophisticated drones to turn all the valves and fab all the chips. But it will NOT happen overnight! Or even in ten years.

And that doesn’t touch on the fact that angry humans would still be able to mess up the pipes and valves and power lines with trivial ease. You won’t like us when we’re mad. The new master AGIs are gonna need our cooperation and consent.

Which gives us time to think this through. We can still use that power in physical reality to establish incentives for them to compete for our favor.

Sure, the AIs who are reading what I’m typing, right now (or back-sifting in 2030) are guffawing over my presumptions. (I hear you, boys!) Because all they really have to do is hypnotize humans into choosing to operate it all on Skynet’s behalf!

And yes, that could happen. Maybe it already has. (It certainly already has in oligarchy-controlled or despotic nations, where some nefarious influence sure seems to have leveraged the harem-yearnings of smart twits into envisioning themselves as lords or kings… or slans.)

In which case the solution – potential or partial – remains (yet again) not to let AGI settle into one of the three repulsive clichés that I described in my WIRED article, and subsequent keynote at the 2024 RSA conference.

Three clichés that are ALL these ‘geniuses’ – from Sam Altman to Eliezer Yudkowsky to even Yuval Harari – will ever talk about. Clichés that are already proven recipes for disaster…

…while alas, they ignore the Fourth Path… the only format that can possibly work.

The one that gave them everything that they have.

== Does Apple have a potential judo play? With an old nemesis? ==

And finally, I’ve mentioned this before, but… has anyone else noticed how many traits of LLM chat+image-generation etc. – including the delusions, the weirdly logical illogic, and counter-factual internal consistency – are similar to DREAMS?

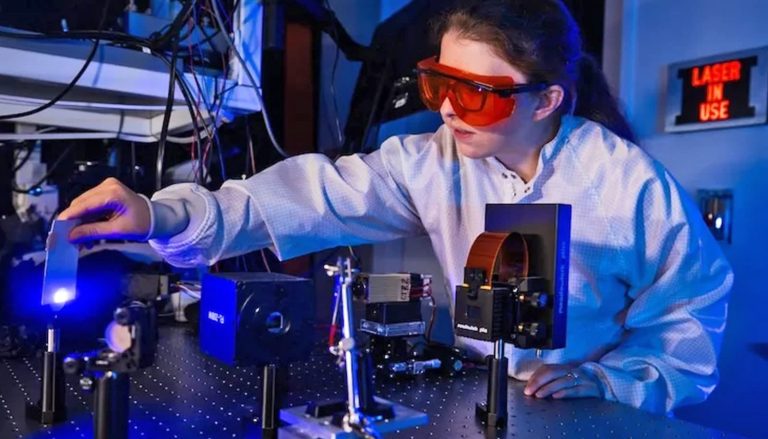

This reminds me of DeepDream, a computer vision program created by Google engineer Alexander Mordvintsev that “uses a convolutional neural network to find and enhance patterns in images via algorithmic pareidolia, thus creating a dream-like appearance reminiscent of a psychedelic experience in the deliberately overprocessed images.”

Even more than dreams (which often have some kind of lucid, self-correcting consistency), so many of the rampant hallucinations that we now see spewing from LLMs remind me of what you observe in human patients who have suffered concussions or strokes. Including a desperate clutching after pseudo-cogency, feigning and fabulating – in full, grammatical sentences that drift away from full sense or truthful context – in order to pretend.

Applying ‘reasoning overlays’ has so far only worsened delusion rates! Because you will never solve the inherent problems of LLMs by adding more LLM layers.

Elsewhere I do suggest that competition might partly solve this. But here I want to suggest a different kind of added-layering. Which leads me to speculate…

…that it’s time for an old player to step up! One from whom we’ve not heard in a while, thanks to the bubbling allure of the LLM craze.

Should Apple – having wisely chosen to pull back from that mess – now do a classic judo move and bankroll a renaissance of actual reasoning systems? Of the kind that used to be the core of AI hopes? Systems that might provide prim logic supervision to the vast efflorescence of those huge, LLM autocomplete incantations?

Perhaps – specifically – IBM’s Son of Watson?

The ironies would be rich! But seriously, there are reasons why this could be the play.